Popular Libraries

PyTorch

Create Subscriptions

In the initialize method, subscribe to some data so you can train the torch model and make predictions.

# Add a security and save a reference to its Symbol.

self._symbol = self.add_equity("SPY", Resolution.DAILY).symbol

Build Models

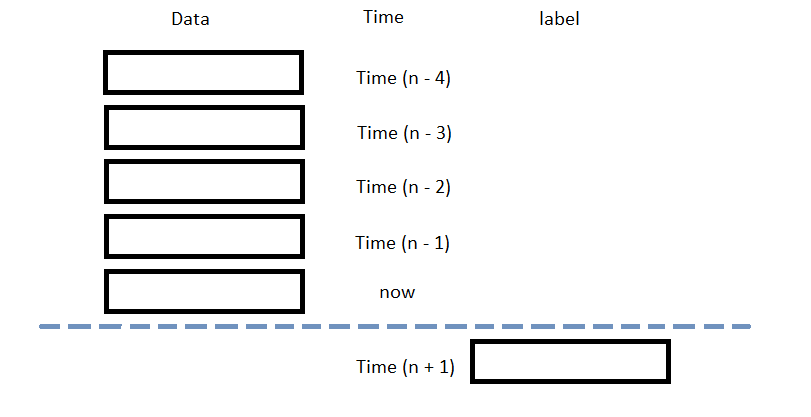

In this example, build a neural-network regression model that uses the following features and labels:

| Data Category | Description |

|---|---|

| Features | The last 5 closing prices. |

| Labels | The following day's closing price |

The following image shows the time difference between the features and labels:
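As a minimal sketch of this feature and label layout, the following plain-Python helper slides a 5-price window over a list of closes. The name `build_windows` and the sample prices are illustrative assumptions, not part of the LEAN API.

```python
def build_windows(closes, n_steps=5):
    """Pair each run of n_steps consecutive closes with the close that follows it."""
    features, labels = [], []
    for i in range(len(closes) - n_steps):
        features.append(closes[i:i + n_steps])  # closes for days i .. i+n_steps-1
        labels.append(closes[i + n_steps])      # close for the following day
    return features, labels


# Hypothetical closing prices, oldest first.
closes = [100.0, 101.0, 102.0, 103.0, 104.0, 105.0, 106.0]
features, labels = build_windows(closes)
```

With 7 prices and a window of 5, the helper yields 2 samples: each label is the close that immediately follows its window.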

Follow these steps to create a method to build the model:

- Define a subclass of nn.Module to be the model. In this example, use the ReLU activation function for each layer.

- Create an instance of the model and set its configuration to train on the GPU if it's available.

# Define a feed-forward neural network with two hidden layers for learning complex features, ReLU
# activations to introduce non-linearity, and a single regression output for predicting continuous values.
class NeuralNetwork(nn.Module):
    # Define the model structure.
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(5, 5),  # Input size, output size of the layer.
            nn.ReLU(),        # ReLU non-linear transformation.
            nn.Linear(5, 5),
            nn.ReLU(),
            nn.Linear(5, 1),  # Output size = 1 for regression.
        )

    # Define the feed-forward prediction procedure.
    def forward(self, x):
        # Convert the NumPy input to a float tensor on the same device as the model's weights.
        device = next(self.parameters()).device
        x = torch.from_numpy(x).float().to(device)
        result = self.linear_relu_stack(x)
        return result

# Use GPU if it's available for faster computation. Otherwise, fall back to CPU.
device = 'cuda' if torch.cuda.is_available() else 'cpu'
# Initialize the model on the selected device.
self.model = NeuralNetwork().to(device)
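The model and its inputs need to live on the same device, otherwise the forward pass raises an error. The following standalone sketch checks this with a network of the same layer sizes. The sample batch and the variable names are assumptions for illustration.

```python
import numpy as np
import torch
from torch import nn

# Select the device the same way as the snippet above.
device = "cuda" if torch.cuda.is_available() else "cpu"

# A stand-in network with the same layer sizes as the example model.
net = nn.Sequential(
    nn.Linear(5, 5), nn.ReLU(),
    nn.Linear(5, 5), nn.ReLU(),
    nn.Linear(5, 1),
).to(device)

# Hypothetical batch of 3 samples with 5 features each.
batch = np.arange(15, dtype=np.float32).reshape(3, 5)
inputs = torch.from_numpy(batch).to(device)  # move the inputs to the model's device

outputs = net(inputs)
output_shape = tuple(outputs.shape)  # one regression value per sample
```

Each sample produces a single output value, so a batch of 3 samples gives an output of shape (3, 1).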

Train Models

You can train the model at the beginning of your algorithm and you can periodically re-train it as the algorithm executes.

Warm Up Training Data

You need historical data to initially train the model at the start of your algorithm. To get the initial training data, make a history request in the initialize method.

# Fill a RollingWindow with 2 years of historical closing prices.
training_length = 252*2
self.training_data = RollingWindow(training_length)
history = self.history[TradeBar](self._symbol, training_length, Resolution.DAILY)
for trade_bar in history:
    self.training_data.add(trade_bar.close)
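A RollingWindow keeps the most recent value at index 0, which is why the training code reverses it into chronological order before building samples. The sketch below imitates that ordering with a `collections.deque`. It is only a stand-in for illustration, not the LEAN class, and the prices are hypothetical.

```python
from collections import deque

# Stand-in for a RollingWindow of size 3: appendleft keeps the newest value at index 0,
# and maxlen drops the oldest value once the window is full.
window = deque(maxlen=3)
for close in [10.0, 11.0, 12.0, 13.0]:  # hypothetical closes, oldest first
    window.appendleft(close)

newest_first = list(window)        # [13.0, 12.0, 11.0]
oldest_first = newest_first[::-1]  # [11.0, 12.0, 13.0]
```

The oldest price (10.0) falls out of the window when the fourth price arrives, and the reversed list is in the order a time-series model expects.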

Define a Training Method

To train the model, define a method that fits the model with the training data.

# Prepare feature and label data for training by processing the RollingWindow data into a time series.
def get_features_and_labels(self, n_steps=5):
    # The RollingWindow stores the newest value first, so reverse it into chronological order.
    close_prices = list(self.training_data)[::-1]

    features = []
    labels = []
    for i in range(len(close_prices)-n_steps):
        features.append(close_prices[i:i+n_steps])
        labels.append(close_prices[i+n_steps])
    features = np.array(features)
    labels = np.array(labels)

    return features, labels

def my_training_method(self):
    features, labels = self.get_features_and_labels()

    # Set the loss and optimization functions.
    # In this example, use the mean squared error as the loss function and stochastic gradient descent as the optimizer.
    loss_fn = nn.MSELoss()
    learning_rate = 0.001
    optimizer = torch.optim.SGD(self.model.parameters(), lr=learning_rate)

    # Loop through each epoch and train the model.
    epochs = 5
    for t in range(epochs):
        # Loop through each sample to train the model.
        for batch, (feature, label) in enumerate(zip(features, labels)):
            # Calculate the prediction and loss. Keep the label on the same device as the prediction.
            pred = self.model(feature)
            real = torch.from_numpy(np.array(label).flatten()).float().to(pred.device)
            loss = loss_fn(pred, real)

            # Perform backpropagation.
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
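The training method above performs one optimizer step per sample. As a sketch of a common variation, the following standalone example groups samples into mini-batches instead. The synthetic data, the batch size of 4, and the smaller `nn.Sequential` model are assumptions for illustration and are not part of the algorithm on this page.

```python
import numpy as np
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical data: 20 samples of 5 features each, with one label per sample.
features = np.random.default_rng(0).normal(size=(20, 5)).astype(np.float32)
labels = features.sum(axis=1, keepdims=True)

model = nn.Sequential(nn.Linear(5, 5), nn.ReLU(), nn.Linear(5, 1))
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

x = torch.from_numpy(features)
y = torch.from_numpy(labels)

batch_size = 4
losses = []
for epoch in range(5):
    # Step through the data in consecutive mini-batches.
    for start in range(0, len(x), batch_size):
        xb = x[start:start + batch_size]
        yb = y[start:start + batch_size]
        loss = loss_fn(model(xb), yb)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Record the loss over the full data set after each epoch, without tracking gradients.
    with torch.no_grad():
        losses.append(loss_fn(model(x), y).item())
```

Batching reduces the number of optimizer steps per epoch and averages the gradient over several samples, which tends to make each update less noisy.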

Set Training Schedule

To train the model at the beginning of your algorithm, in the initialize method, call the train method.

# Train the model initially to provide a baseline for prediction and decision-making.
self.train(self.my_training_method)

To periodically re-train the model as your algorithm executes, in the initialize method, call the train method as a Scheduled Event.

# Train the model every Sunday at 8:00 AM.
self.train(self.date_rules.every(DayOfWeek.SUNDAY), self.time_rules.at(8, 0), self.my_training_method)

Update Training Data

To update the training data as the algorithm executes, in the on_data method, add the closing price of the current TradeBar to the RollingWindow that holds the training data.

# Add the latest bar to training data to ensure the model is trained with the most recent market data.
def on_data(self, slice: Slice) -> None:
    if self._symbol in slice.bars:
        self.training_data.add(slice.bars[self._symbol].close)

Predict Labels

To predict the labels of new data, in the on_data method, get the most recent set of features and pass it to the model.

# Get the most recent feature set (the last 5 closing prices, oldest first) and make a prediction.
features = np.array(list(self.training_data)[::-1][-5:])
prediction = self.model(features.reshape(1, -1))
# Move the result to the CPU before converting it to a Python float.
prediction = float(prediction.detach().cpu().numpy().ravel()[-1])

You can use the label prediction to place orders.

# Place orders based on the forecasted market direction.
if prediction > slice.bars[self._symbol].price:
    self.set_holdings(self._symbol, 1)
elif prediction < slice.bars[self._symbol].price:
    self.set_holdings(self._symbol, -1)
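The same decision rule can be isolated in a small pure function, which makes it easy to check outside of a backtest. The following is a sketch under that assumption. The name `target_weight` is hypothetical, and the return value is meant to be passed to set_holdings only when it is non-zero.

```python
def target_weight(prediction: float, price: float) -> int:
    """Return 1 to go long, -1 to go short, or 0 to leave the position unchanged."""
    if prediction > price:
        return 1
    if prediction < price:
        return -1
    return 0


# Hypothetical predictions compared against a current price of 500.
weights = [target_weight(p, 500.0) for p in (505.0, 495.0, 500.0)]
```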

Save Models

Follow these steps to save PyTorch models into the Object Store:

- Set the key name of the model to be stored in the Object Store.

# Set the key to store the model in the Object Store so you can use it later.
model_key = "model"

- Call the get_file_path method with the key.

# Get the file path to correctly save and access the model in Object Store.
file_name = self.object_store.get_file_path(model_key)

This method returns the file path where the model will be stored.

- Call the dump method with the file path.

# Serialize the model and save it to the file.
joblib.dump(self.model, file_name)

If you dump the model using the joblib module before you save the model, you don't need to retrain it the next time you load it.

Load Models

You can load and trade with pre-trained PyTorch models that you saved in the Object Store. To load a PyTorch model from the Object Store, in the initialize method, get the file path to the saved model and then call the load method.

# Load the PyTorch model from Object Store to use its saved state and update it with new data if needed.
def initialize(self) -> None:
    if self.object_store.contains_key(model_key):
        file_name = self.object_store.get_file_path(model_key)
        self.model = joblib.load(file_name)

The contains_key method returns a boolean that represents if the model_key is in the Object Store. If the Object Store does not contain the model_key, save the model using the model_key before you proceed.
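PyTorch also has its own serialization through `torch.save` and `load_state_dict`. The following sketch shows that route as an alternative to the joblib calls above, not as the approach this page uses. It writes to a temporary file as a stand-in for the path returned by get_file_path, and the small `nn.Sequential` model is an assumption for illustration.

```python
import os
import tempfile

import torch
from torch import nn

model = nn.Sequential(nn.Linear(5, 5), nn.ReLU(), nn.Linear(5, 1))

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "model.pt")  # hypothetical file path
    torch.save(model.state_dict(), path)     # save only the learned parameters

    # Rebuild the same architecture, then load the saved parameters into it.
    restored = nn.Sequential(nn.Linear(5, 5), nn.ReLU(), nn.Linear(5, 1))
    restored.load_state_dict(torch.load(path))

# Both models should now give identical predictions for the same input.
x = torch.ones(1, 5)
same_output = torch.equal(model(x), restored(x))
```

Saving the state dictionary stores only the weights, so the class definition must be available when you load them, whereas pickling the whole object with joblib stores a reference to the class as well.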

Examples

The following examples demonstrate some common practices for using the PyTorch library.

Example 1: Multi-layer Perceptron Model

The following algorithm uses the PyTorch library to predict the future price movement from the previous 5 daily closing prices. The model is a multi-layer perceptron with ReLU activation functions, optimized with stochastic gradient descent. It is trained on a rolling window of 2 years of data. To keep the model applicable to the current market environment, the algorithm recalibrates it every Sunday.

from AlgorithmImports import *
import numpy as np
import torch
from torch import nn
import joblib

class PyTorchExampleAlgorithm(QCAlgorithm):

    def initialize(self) -> None:
        self.set_start_date(2024, 9, 1)
        self.set_end_date(2024, 12, 31)
        self.set_cash(100000)

        # Request SPY data for model training, prediction and trading.
        self.symbol = self.add_equity("SPY", Resolution.DAILY).symbol

        # 2-year data to train the model.
        training_length = 252*2
        self.training_data = RollingWindow(training_length)
        # Warm up the training dataset to train the model immediately.
        history = self.history[TradeBar](self.symbol, training_length, Resolution.DAILY)
        for trade_bar in history:
            self.training_data.add(trade_bar.close)

        # Retrieve already trained model from object store to use immediately.
        self._model_key = 'pytorch-example-model'
        if self.live_mode and self.object_store.contains_key(self._model_key):
            file_name = self.object_store.get_file_path(self._model_key)
            self.model = joblib.load(file_name)
        else:
            # Otherwise, create an MLP model to predict price movement.
            device = 'cuda' if torch.cuda.is_available() else 'cpu'
            self.model = NeuralNetwork().to(device)

        # Train the model to use the prediction right away.
        self.train(self.my_training_method)
        # Recalibrate the model weekly to ensure its accuracy on the updated domain.
        self.train(self.date_rules.every(DayOfWeek.SUNDAY), self.time_rules.at(8,0), self.my_training_method)

    def get_features_and_labels(self, n_steps=5) -> tuple[np.ndarray, np.ndarray]:
        # The RollingWindow stores the newest value first, so reverse it into chronological order.
        close_prices = list(self.training_data)[::-1]

        # Stack the data into samples of 5 consecutive closing prices to train with.
        features = []
        labels = []
        for i in range(len(close_prices)-n_steps):
            features.append(close_prices[i:i+n_steps])
            labels.append(close_prices[i+n_steps])
        features = np.array(features)
        labels = np.array(labels)

        return features, labels

    def my_training_method(self) -> None:
        # Prepare the processed training data.
        features, labels = self.get_features_and_labels()

        # Set the loss and optimization functions.
        # In this example, use the mean squared error as the loss function and stochastic gradient descent as the optimizer.
        loss_fn = nn.MSELoss()
        learning_rate = 0.001
        optimizer = torch.optim.SGD(self.model.parameters(), lr=learning_rate)

        # Train for a preset number of epochs.
        epochs = 5
        for t in range(epochs):
            # Fit the model one sample at a time.
            for batch, (feature, label) in enumerate(zip(features, labels)):
                # Compute prediction and loss. Keep the label on the same device as the prediction.
                pred = self.model(feature)
                real = torch.from_numpy(np.array(label).flatten()).float().to(pred.device)
                loss = loss_fn(pred, real)

                # Perform backpropagation.
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

    def on_data(self, slice: Slice) -> None:
        if self.symbol in slice.bars:
            self.training_data.add(slice.bars[self.symbol].close)

            # Get the prediction from the most recent features (the last 5 closing prices, oldest first).
            features = np.array(list(self.training_data)[::-1][-5:])
            prediction = self.model(features.reshape(1, -1))
            # Move the result to the CPU before converting it to a Python float.
            prediction = float(prediction.detach().cpu().numpy().ravel()[-1])

            # If the predicted direction is going upward, buy SPY.
            if prediction > slice.bars[self.symbol].price:
                self.set_holdings(self.symbol, 1)
            # If the predicted direction is going downward, sell SPY.
            elif prediction < slice.bars[self.symbol].price:
                self.set_holdings(self.symbol, -1)

    def on_end_of_algorithm(self) -> None:
        # Store the model to object store to retrieve it in other instances in case the algorithm stops.
        if not self.live_mode:
            return
        file_name = self.object_store.get_file_path(self._model_key)
        joblib.dump(self.model, file_name)
        self.object_store.save(self._model_key)

class NeuralNetwork(nn.Module):
    # Model structure
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(5, 5),  # Input size, output size of the layer
            nn.ReLU(),        # ReLU non-linear transformation
            nn.Linear(5, 5),
            nn.ReLU(),
            nn.Linear(5, 1),  # Output size = 1 for regression
        )

    # Feed-forward training/prediction
    def forward(self, x):
        # Convert the NumPy input to a float tensor on the same device as the model's weights.
        device = next(self.parameters()).device
        x = torch.from_numpy(x).float().to(device)
        result = self.linear_relu_stack(x)
        return result