Popular Libraries

XGBoost

Get Historical Data

Get some historical market data to train and test the model. For example, to get data for the SPY ETF during 2020 and 2021, run:

qb = QuantBook()
symbol = qb.add_equity("SPY", Resolution.DAILY).symbol
history = qb.history(symbol, datetime(2020, 1, 1), datetime(2022, 1, 1)).loc[symbol]

Prepare Data

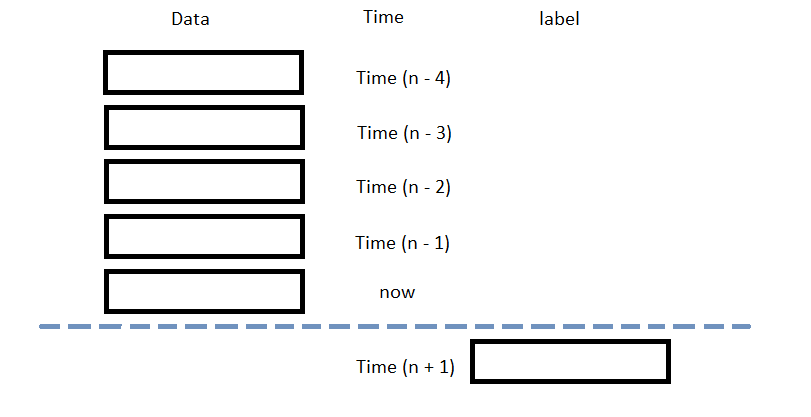

You need some historical data to prepare the data for the model. If you have historical data, manipulate it to train and test the model. In this example, use the following features and labels:

| Data Category | Description |

|---|---|

| Features | The last 5 closing prices |

| Labels | The following day's closing price |

The following image shows the time difference between the features and labels:

Follow these steps to prepare the data:

- Perform fractional differencing on the historical data.

- Loop through the `df` DataFrame and collect the features and labels.

- Convert the lists of features and labels into `numpy` arrays.

- Standardize the features and labels.

- Split the data into training and testing periods.

df = (history['close'] * 0.5 + history['close'].diff() * 0.5)[1:]

Fractional differencing helps make the data stationary while retaining some of the memory that full differencing would remove.
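The one-line transform above is an equal-weight blend of the close and its first difference. For comparison, the sketch below computes fixed-window fractional differencing from the binomial-series weights, where the first weight is 1 and each later weight is `-w[k-1] * (d - k + 1) / k`. The helper names `frac_diff_weights` and `frac_diff`, the window lengths, and the sample prices are assumptions for illustration; they are not part of the original example.

```python
def frac_diff_weights(d, size):
    """Return the first `size` weights of the (1 - B)^d expansion."""
    weights = [1.0]
    for k in range(1, size):
        weights.append(-weights[-1] * (d - k + 1) / k)
    return weights


def frac_diff(values, d, window):
    """Apply fixed-window fractional differencing of order d to a list."""
    weights = frac_diff_weights(d, window)
    result = []
    for t in range(window - 1, len(values)):
        # weights[0] multiplies the newest observation, weights[k] the k-th lag.
        result.append(sum(w * values[t - k] for k, w in enumerate(weights)))
    return result


prices = [100.0, 101.0, 103.0, 102.0, 105.0]  # made-up closes

print(frac_diff_weights(0.5, 4))  # [1.0, -0.5, -0.125, -0.0625]
print(frac_diff(prices, 1.0, 2))  # d = 1 gives first differences: [1.0, 2.0, -1.0, 3.0]
```

With `d` between 0 and 1, the weights decay gradually, so older observations still contribute to each differenced value.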

n_steps = 5
features = []
labels = []
for i in range(len(df)-n_steps):
    features.append(df.iloc[i:i+n_steps].values)
    labels.append(df.iloc[i+n_steps])

features = np.array(features)
labels = np.array(labels)
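The loop above can also be written without explicit iteration. A minimal sketch, assuming NumPy 1.20 or later (for `sliding_window_view`) and a small made-up series in place of `df`:

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

n_steps = 5
series = np.arange(8, dtype=float)  # stand-in for df.values

# Loop version, matching the feature/label loop in this section.
loop_features, loop_labels = [], []
for i in range(len(series) - n_steps):
    loop_features.append(series[i:i + n_steps])
    loop_labels.append(series[i + n_steps])

# Vectorized version: every window of n_steps values, minus the last window,
# which has no following value to use as its label.
features = sliding_window_view(series, n_steps)[:-1]
labels = series[n_steps:]

print(features.shape, labels.shape)  # (3, 5) (3,)
```

Both versions produce the same arrays; the vectorized form mainly helps when the history is long.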

X = (features - features.mean()) / features.std()
y = (labels - labels.mean()) / labels.std()

X_train, X_test, y_train, y_test = train_test_split(X, y)
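`train_test_split` shuffles the rows by default, so the call above mixes earlier and later samples in both sets, and the standardization uses statistics from the whole history. If you want the testing set to be a strictly later period, one option is a chronological split with the scaling statistics taken from the training rows only. This is a sketch on made-up arrays, not the original example's method; the 75% split point mirrors the `train_test_split` default, and passing `shuffle=False` to `train_test_split` keeps the rows in the same order.

```python
import numpy as np

rng = np.random.default_rng(0)
features = rng.normal(100.0, 5.0, size=(20, 5))  # made-up feature windows
labels = rng.normal(100.0, 5.0, size=20)         # made-up labels

split = int(len(features) * 0.75)  # first 75% of rows for training

# Compute scaling statistics from the training period only.
mean, std = features[:split].mean(), features[:split].std()
label_mean, label_std = labels[:split].mean(), labels[:split].std()

X_train = (features[:split] - mean) / std
X_test = (features[split:] - mean) / std
y_train = (labels[:split] - label_mean) / label_std
y_test = (labels[split:] - label_mean) / label_std

print(X_train.shape, X_test.shape)  # (15, 5) (5, 5)
```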

Train Models

Next, train a gradient-boosted random forest to predict future prices.

- Split the data for training and testing to evaluate our model.

- Format the training set into an XGBoost `DMatrix`.

- Train the model with parameters.

X_train, X_test, y_train, y_test = train_test_split(X, y)

dtrain = xgb.DMatrix(X_train, label=y_train)

params = {
    'booster': 'gbtree',
    'colsample_bynode': 0.8,
    'learning_rate': 0.1,
    'lambda': 0.1,
    'max_depth': 5,
    'num_parallel_tree': 100,
    'objective': 'reg:squarederror',
    'subsample': 0.8,
}
model = xgb.train(params, dtrain, num_boost_round=10)
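In XGBoost, setting `num_parallel_tree` above 1 grows that many trees in each boosting round, which is what makes this a boosted random forest rather than a plain gradient-boosted model. A quick sketch of the resulting ensemble size, using the parameter values from the training call in this section (the variable names `num_boost_round` and `total_trees` are only for illustration):

```python
params = {
    'num_parallel_tree': 100,  # trees grown per boosting round (the "forest")
    'subsample': 0.8,          # fraction of training rows sampled
    'colsample_bynode': 0.8,   # fraction of columns sampled at each split
}
num_boost_round = 10           # number of boosting rounds

# For a single-output regression objective, each round adds one forest.
total_trees = params['num_parallel_tree'] * num_boost_round
print(total_trees)  # 1000
```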

Test Models

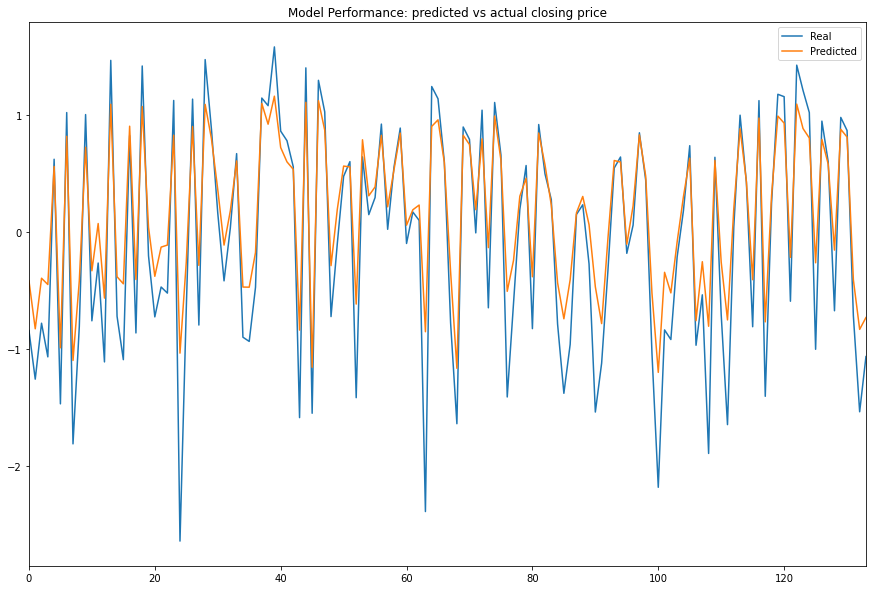

Make predictions on the testing data set, then plot the predicted values against the expected values to see whether the model has predictive power.

- Format the testing set into an XGBoost `DMatrix`.

- Predict with the testing set data.

- Plot the result.

dtest = xgb.DMatrix(X_test, label=y_test)

y_predict = model.predict(dtest)

df = pd.DataFrame({'Real': y_test.flatten(), 'Predicted': y_predict.flatten()})
df.plot(title='Model Performance: predicted vs actual closing price', figsize=(15, 10))
plt.show()
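A plot gives a visual impression only. To put a number on the comparison, you could also compute a few summary metrics from the same two arrays. The sketch below uses made-up values in place of `y_test` and `y_predict`; the choice of metrics (RMSE, and R² against a predict-the-mean baseline) is an assumption, not part of the original example.

```python
import numpy as np

y_test = np.array([0.5, -1.0, 0.2, 1.3, -0.4])     # made-up expected values
y_predict = np.array([0.4, -0.7, 0.0, 1.0, -0.1])  # made-up predictions

errors = y_test - y_predict
rmse = np.sqrt(np.mean(errors ** 2))

# R^2 compares the model with always predicting the mean of y_test.
ss_res = np.sum(errors ** 2)
ss_tot = np.sum((y_test - y_test.mean()) ** 2)
r_squared = 1 - ss_res / ss_tot

print(f"RMSE: {rmse:.4f}, R^2: {r_squared:.4f}")
```

An R² near or below zero would suggest the model does no better than predicting the average value.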

Store Models

Saving the Model

Dump the model with the joblib module and save it to an Object Store file path. This way, you don't need to retrain the model in later sessions, which saves time and computational resources.

- Set the key name of the model to be stored in the Object Store.

- Call `get_file_path` with the key's name to get the file path.

- Call `dump` with the model and file path to save the model to the file path.

model_key = "model"

file_name = qb.object_store.get_file_path(model_key)

joblib.dump(model, file_name)

Loading the Model

Retrieve the model from the Object Store file path and load it with joblib.

- Call the `contains_key` method.

- Call `get_file_path` with the key's name to get the file path.

- Call `load` with the file path to fetch the saved model.

qb.object_store.contains_key(model_key)

This method returns a boolean that indicates whether `model_key` is in the Object Store. If the Object Store does not contain `model_key`, save the model under that key before you proceed.

file_name = qb.object_store.get_file_path(model_key)

loaded_model = joblib.load(file_name)
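The check and the load can be combined so that a missing key is handled in one place. The sketch below is an assumption-heavy illustration: `load_model_if_present` is a hypothetical helper, `_FakeObjectStore` is a stand-in that only mimics the `contains_key` and `get_file_path` calls used in this section so the block runs outside a research notebook, and `pickle` stands in for `joblib`. In a notebook you would pass `qb.object_store` and `joblib.load` instead.

```python
import os
import pickle
import tempfile


class _FakeObjectStore:
    """Hypothetical stand-in exposing only the two calls used in this section."""

    def __init__(self, folder):
        self._folder = folder

    def get_file_path(self, key):
        return os.path.join(self._folder, key)

    def contains_key(self, key):
        return os.path.exists(self.get_file_path(key))


def _pickle_load(path):
    with open(path, "rb") as handle:
        return pickle.load(handle)


def load_model_if_present(object_store, key, load=_pickle_load):
    """Return the stored model, or None when the key is not in the store."""
    if not object_store.contains_key(key):
        return None
    return load(object_store.get_file_path(key))


store = _FakeObjectStore(tempfile.mkdtemp())
missing = load_model_if_present(store, "model")  # nothing saved yet

with open(store.get_file_path("model"), "wb") as handle:
    pickle.dump({"weights": [1, 2, 3]}, handle)  # placeholder "model"

loaded = load_model_if_present(store, "model")
```

Returning `None` for a missing key lets the caller decide whether to retrain and save the model or stop.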

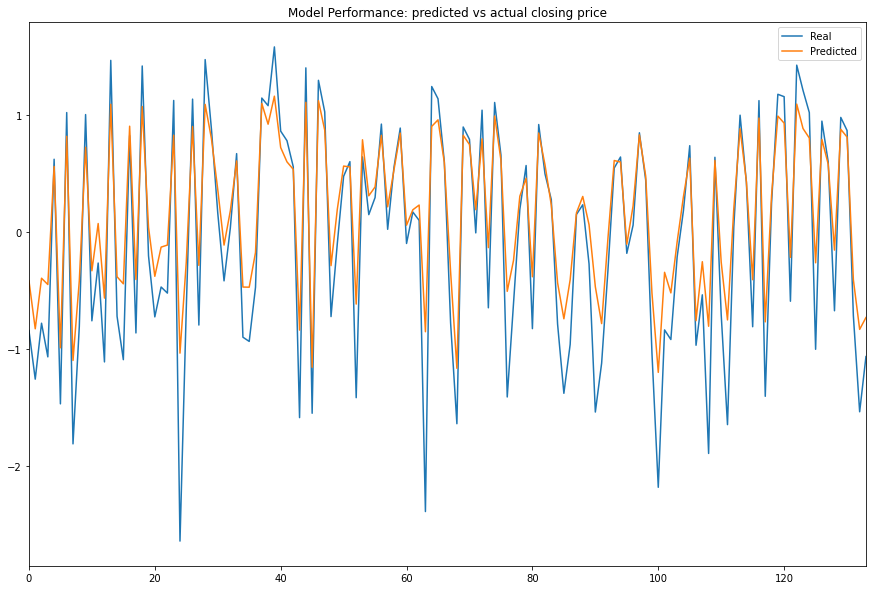

To confirm that the model loaded successfully, test it on the testing data set.

y_pred = loaded_model.predict(dtest)
df = pd.DataFrame({'Real': y_test.flatten(), 'Predicted': y_pred.flatten()})
df.plot(title='Model Performance: predicted vs actual closing price', figsize=(15, 10))

Examples

The following examples demonstrate some common practices for using the XGBoost library.

Example 1: Predict Next Price

The following research notebook uses an XGBoost machine learning model to predict the next day's closing price from the previous 5 daily closes.

# Import the XGBoost library and others.
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import xgboost as xgb
from sklearn.model_selection import train_test_split
import joblib

# Instantiate the QuantBook for researching.
qb = QuantBook()

# Request the daily SPY history for the date range to be studied.
symbol = qb.add_equity("SPY", Resolution.DAILY).symbol
history = qb.history(symbol, datetime(2020, 1, 1), datetime(2022, 1, 1)).loc[symbol]

# Apply fractional differencing to the close price to build the features and labels.
df = (history['close'] * 0.5 + history['close'].diff() * 0.5)[1:]

# Use the previous 5 differenced closes as the features.
# Use the next differenced close as the label for the model to learn.
n_steps = 5
features = []
labels = []
for i in range(len(df)-n_steps):
    features.append(df.iloc[i:i+n_steps].values)
    labels.append(df.iloc[i+n_steps])

# Convert to arrays and standardize the data for faster convergence.
features = np.array(features)
labels = np.array(labels)
X = (features - features.mean()) / features.std()
y = (labels - labels.mean()) / labels.std()

# Split the data into a training set and a test set for validation.
X_train, X_test, y_train, y_test = train_test_split(X, y)

# Format the training set into an XGBoost matrix.
dtrain = xgb.DMatrix(X_train, label=y_train)

# Train the model with parameters.
params = {
    'booster': 'gbtree',
    'colsample_bynode': 0.8,
    'learning_rate': 0.1,
    'lambda': 0.1,
    'max_depth': 5,
    'num_parallel_tree': 100,
    'objective': 'reg:squarederror',
    'subsample': 0.8,
}
model = xgb.train(params, dtrain, num_boost_round=10)

# Format the testing set into an XGBoost matrix to test the model.
dtest = xgb.DMatrix(X_test, label=y_test)

# Predict with the testing set data.
y_predict = model.predict(dtest)

# Plot the result.
df = pd.DataFrame({'Real': y_test.flatten(), 'Predicted': y_predict.flatten()})
df.plot(title='Model Performance: predicted vs actual closing price', figsize=(15, 10))
plt.show()

# Store the model in the Object Store so the next research session or a trading algorithm can access it.
model_key = "model"
file_name = qb.object_store.get_file_path(model_key)
joblib.dump(model, file_name)