I just whipped up my first options algo as a learning exercise, and I’d love some feedback.

I’ve only written in Python once before, around 100 years ago, so I’d appreciate specific pointers on code quality, structure, redundancy (if any), shaving lines of code, speeding up execution, etc. The logic itself is simple enough:

Entry:

30 minutes before market close, if the portfolio is empty, open as many single OTM call options as possible, with strikes that are 10% above the underlying, expiring in 120 days.

Exit:

30 minutes after market open, for each call in the portfolio, if the call is ITM or there are fewer than 30 days until expiry, liquidate the position.
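
For readers who want the two rules in code form, here is a minimal plain-Python sketch. It does not use the QuantConnect API: the `Call` class, `pick_entry_call`, and `should_exit` are hypothetical names made up for illustration, and "closest expiry first, then closest strike" is just one assumed way to break ties.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Call:
    """Hypothetical stand-in for an option contract (not a LEAN type)."""
    strike: float
    expiry: date


def pick_entry_call(chain, underlying_price, today, otm_pct=0.10, target_days=120):
    """Return the OTM call closest to the target expiry, then the target strike."""
    target_strike = underlying_price * (1 + otm_pct)
    target_expiry = today + timedelta(days=target_days)
    # Only consider OTM calls (strike above the underlying) that have not expired.
    candidates = [c for c in chain if c.strike > underlying_price and c.expiry > today]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda c: (abs((c.expiry - target_expiry).days), abs(c.strike - target_strike)),
    )


def should_exit(call, underlying_price, today, min_days_left=30):
    """Exit when the call is ITM or fewer than min_days_left days remain."""
    is_itm = underlying_price > call.strike
    days_left = (call.expiry - today).days
    return is_itm or days_left < min_days_left
```

In an actual algorithm the chain, the underlying price, and the current date would come from the platform's data feed rather than being passed in by hand.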

I’ve commented the code comprehensively so I hope this also serves as a guide to other n00bs. Open to any questions.

Thanks!

[PS] I’m not looking for feedback on the strategy logic itself. It is deliberately overfit to ZM's 2020 bull run, and I would not live trade it as is. However, one could possibly use this strategy in combination with universe selection to roll 'Mini Leaps' on high-momentum stocks that are currently trending. To avoid overfitting, you could select strikes and expiration dates based on some function of volatility.

Cole S

Hi Ikezi,

The code looks very clean! I was planning on working on an options "scalping" strategy this weekend so glad to see this and get some ideas.

As you said in your PS, the next step would be using some form of universe selection, and then you will need a custom SymbolData object to hold information about each ticker.

Also, FYI: each time you call the Greeks function it lazy loads the Greeks, so referencing them every time step will slow you down. Use them sparingly. It does not look like you are using them currently, but I usually use Delta as the selection criterion rather than % of price. I think that would make more sense when using a universe instead of a single equity.
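
One way to keep Greek evaluations to a minimum is to apply the cheap filters (strike, expiry) first and only ask for Delta on the few contracts that survive. The sketch below is plain Python, not the QuantConnect API: `Contract`, its `delta()` method, and `pick_by_delta` are hypothetical, and the counter only exists to show how many "expensive" evaluations happen.

```python
from dataclasses import dataclass


@dataclass
class Contract:
    """Hypothetical contract whose delta is costly to compute (not a LEAN type)."""
    strike: float
    days_to_expiry: int
    model_delta: float  # pretend this is what the pricing model would return
    delta_calls: int = 0

    def delta(self):
        # Each call stands in for one expensive pricing-model evaluation.
        self.delta_calls += 1
        return self.model_delta


def pick_by_delta(chain, underlying_price, target_delta=0.30, min_days=90, max_days=150):
    """Shortlist with cheap filters, then evaluate delta once per survivor."""
    shortlist = [
        c for c in chain
        if c.strike > underlying_price and min_days <= c.days_to_expiry <= max_days
    ]
    if not shortlist:
        return None
    # Store each delta next to its contract so it is never requested twice.
    scored = [(abs(c.delta() - target_delta), c) for c in shortlist]
    return min(scored, key=lambda pair: pair[0])[1]
```

The 0.30 target and the 90 to 150 day window are illustrative values, not recommendations.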

.ekz.

Hi kctrader

Thanks for the kind words and this timely feedback, as I am about to start working on the universe selection logic. I was wondering how best to track state for each ticker, so your comment on SymbolData is perfect timing. Thanks for this.

On the Greeks, I have seen people complaining about speed with Greeks on QC, which is why I used % of price. In real life (manual trading), I use delta. It sounds like I will have to deal with the pain of Greeks sometime soon. Arggh.

Leandro Maia

Ikezi,

Thanks for sharing. I'm trying to learn more about options and it was interesting to see your implementation. If you need support with the universe and SymbolData, I can try to help.

.ekz.

Leandro Maia

Thanks for the offer. I have seen some of your comments in other threads, and you have great ideas. I can also answer any questions you have on options trading (in general, not on QC).

I just finished the universe bootcamp course, but it would be great to see how people do it in practice, in real algos. I will sift through forum posts, but I would appreciate you sharing any public algos that come to mind.

For starters, I'm considering filtering a universe of stocks that meet the following criteria, with a goal of catching some of those strong short-term uptrends:

I'm less sure about 4 & 5, but would love to hear any thoughts / see any code.

Thanks in advance!

Leandro Maia

Ikezi,

The code attached attempts to perform what you suggested for universe selection. QC doesn't have data for future earnings report release dates, so I set a maximum number of days after the latest release as a limit. The final selection is done in the self.Selection function, and the selected symbols are added to the self.selected list. Let me know if it is useful.

.ekz.

Wow. Leandro Maia

I did not expect this. I was hoping for some pointers at most. You have saved me a lot of time. Thanks a lot!

Give me some time to understand this, integrate it, and get back to you.

.ekz.

Thanks again for this.

It makes sense, the filtered selection works well, and you’ve given me a good foundation that I can extend to build more complex logic. Here are a few updates / thoughts / questions:

Changes made to new version: I added the universe selection, so now we only trade the most recent symbol added from today's filtered stocks. I also added order messages, and a number of configuration options, including things like roiTarget, maxDaysInTrade, etc. These are still in progress though, as my focus was largely on universe selection. You can see this from the poor performance of the algo :-)

On Indicator periods: Since everything is on the minute resolution, I wondered how best to codify periods for other resolutions like 30Mins. I noticed you transformed the period count based on minutes, so the 20-EMA on the 30min time frame becomes a 600-EMA on the 1minute time frame. What are your thoughts on the accuracy of this translation? It seems to work well, but how different will this be compared to a true 20-EMA on the 30min time frame?
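
A quick way to get a feel for the size of the difference is to compute both versions on the same series. The sketch below is plain Python on a synthetic random walk (not market data and not LEAN's indicator classes); seeding the EMA with the first value is an assumption of this sketch.

```python
import random


def ema(values, period):
    """Final value of an EMA over `values`, seeded with the first value."""
    alpha = 2.0 / (period + 1)
    result = values[0]
    for v in values[1:]:
        result = alpha * v + (1 - alpha) * result
    return result


def closes_every(values, n):
    """Keep the last value of each complete group of n (the consolidated close)."""
    return [values[i + n - 1] for i in range(0, len(values) - n + 1, n)]


random.seed(0)
minute_closes = [100.0]
for _ in range(30 * 200 - 1):  # 200 half-hour bars of synthetic minute closes
    minute_closes.append(minute_closes[-1] + random.gauss(0, 0.05))

thirty_min_closes = closes_every(minute_closes, 30)
ema_600_on_1m = ema(minute_closes, 600)
ema_20_on_30m = ema(thirty_min_closes, 20)

print(f"600-EMA on 1-minute closes: {ema_600_on_1m:.4f}")
print(f"20-EMA on 30-minute closes: {ema_20_on_30m:.4f}")
```

The weight decay over one half hour is almost identical in the two versions ((1 - 2/601)^30 ≈ 0.9048 versus 1 - 2/21 ≈ 0.9048), so the remaining difference comes from the 1-minute version weighting every minute's close inside each half hour, while the 30-minute version only sees the bar closes.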

On QuantConnect Speed: I expected some slow down but I’m surprised to see that LEAN slows down significantly when we introduce universe selection. I’m a little saddened by this. I’ve learned that using greeks will make execution even MORE slow, and greeks are critical for my strategies. To optimize for execution speed, I’ll likely have to write more unreadable code (a lot more in-line logic). I’m open to any other optimization tips.

Next Steps:

See attached for the most recent (rough) work in progress, with your logic integrated, and some refactoring. Note that it's no longer 'rolling' contracts for the same ticker, so i've renamed it to a 'trader'.

Cole S

You can get the indicators from the timeframe you want by using consolidators. In the code creating Symbol you can call the helper method for creating consolidators, like so: algorithm.Consolidate(symbol, timedelta(minutes=30), consolidat_30m_handler). The handler function is where you will update 30m indicators.

That will give you back a consolidator object that is already subscribed to the data feed. If you don't use the helper method there are a few steps.

At this point, however, your indicators cannot automatically update. So rather than creating the indicators with the helper methods, you simply create the indicators manually (ema30 = ExponentialMovingAverage(30), for example) and then in the handler you call ema30.Update() and pass in Close or whatever value you are using.
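
To make the flow concrete, here is a plain-Python sketch of the pattern: minute bars go into a consolidator, the consolidator calls a handler once per completed 30-minute bar, and the handler updates the indicator by hand. `MinuteConsolidator`, `Bar`, and `Ema` are hypothetical stand-ins written for this example, not the LEAN classes, and they count bars instead of looking at timestamps.

```python
from dataclasses import dataclass


@dataclass
class Bar:
    open: float
    high: float
    low: float
    close: float


class MinuteConsolidator:
    """Hypothetical stand-in for a consolidator: merges n minute bars into one."""

    def __init__(self, n, handler):
        self.n = n
        self.handler = handler
        self._working = None
        self._count = 0

    def update(self, bar):
        if self._working is None:
            self._working = Bar(bar.open, bar.high, bar.low, bar.close)
        else:
            self._working.high = max(self._working.high, bar.high)
            self._working.low = min(self._working.low, bar.low)
            self._working.close = bar.close
        self._count += 1
        if self._count == self.n:
            # Hand the finished bar to the handler and start a new one.
            done, self._working, self._count = self._working, None, 0
            self.handler(done)


class Ema:
    """Manually updated EMA, seeded with the first value (an assumption)."""

    def __init__(self, period):
        self.alpha = 2.0 / (period + 1)
        self.value = None
        self.samples = 0

    def update(self, x):
        if self.value is None:
            self.value = x
        else:
            self.value = self.alpha * x + (1 - self.alpha) * self.value
        self.samples += 1


ema30 = Ema(30)


def on_30m_bar(bar):
    # This is the only place the 30-minute indicator gets new data.
    ema30.update(bar.close)


consolidator = MinuteConsolidator(30, on_30m_bar)
for minute in range(90):
    price = 100.0 + minute
    consolidator.update(Bar(price, price, price, price))

print(ema30.samples, ema30.value)
```

Because the handler is ordinary code, it is also a natural place for any extra per-bar state you want to track alongside the indicator.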

.ekz.

Thanks for the insight, kctrader. I will give that a shot.

If I understand this correctly, does this mean we will no longer need to call "algorithm.RegisterIndicator"? It seems this is how we are currently subscribing to updates. If we are going to update manually, I can remove this.

Am I correct, or is there some other use for it?

Leandro Maia

Ikezi,

We can add the consolidator and still keep the indicator registration to get automatic updates and avoid the need to do it manually. I'll propose an implementation, and out of curiosity I'll compare it with the results without the consolidator.

Leandro Maia

Ikezi,

Here follows an attempt to add consolidators. Unfortunately I'm not able to perform the warm-up. If the warm-up bit (lines 128-140) is commented out, then the code works. It would be nice if someone else could have a look.

I also noted that there's a difference in the indicators calculated with and without consolidation. In my opinion this shouldn't be expected, but I couldn't find an explanation.

Leandro Maia

For some reason I'm not able to attach. I'll try again later.

Leandro Maia

Trying again.

Cole S

Nice work Leandro. You can combine these two lines:

    self.consolidator_5min = TradeBarConsolidator(timedelta(minutes=5))
    algorithm.SubscriptionManager.AddConsolidator(symbol, self.consolidator_5min)

into:

    self.consolidator_5min = algorithm.Consolidate(symbol, timedelta(minutes=5), self.consolidator_5m)

The internal LEAN code also checks whether a consolidator already exists, so you don't have to check for a duplicate yourself, which is nice.

The reason the values are different is because having an indicator run on minute resolution for a longer time period has many more data points and changes the indicator noticeably. Standard Deviation over 1 minute bars for 120 periods is much less than the Standard Deviation over 30 minute bars for 4 periods even though they span the same duration.

.ekz.

Thanks for sharing this. I completed a consolidator version last night as well (see attached). At first I didn’t like updating manually, but I now appreciate that I have additional logic control points in the code, where I can manage state if need be. I do need to add a dispose method though, so thanks for that.

I think some discrepancies with results are to be expected, since we are making calculations on different price points. However:

Leandro Maia

Ikezi,

In the implementation below I added the indicator warm-up back into your code (lines 133-141). However, it is not being done through the consolidator. We can amend that when we find a solution. I also suggest using the logic in lines 90-92 to avoid division by zero.

With your implementation style for the consolidators, I'm not sure how to use the dispose method, because the consolidators are not being assigned to variables...

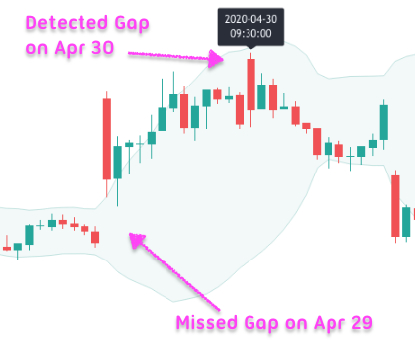

Regarding GILD, on April 30th I can see a gap, and GapUp was flagged True. The same goes for the 29th. Maybe it was selected on one day and not the other because of the other conditions...

I don't have experience with splits...

.ekz.

Thanks Leandro,

I'll add the logic for zero division and warm up.

Regarding Gaps: I noticed the gap logic wasn't as expected. Currently, at market open, it is looking at yesterday's close in relation to the current close.

    gap = (self.Securities[symbol].Close - self.data[symbol].LastClose) / self.data[symbol].LastClose

We are calling this a minute into the trading day, so does "self.Securities[symbol].Close" refer to the close of the first 1Min bar?

If so, is this intentional? I would have expected that, to look for gap ups, we look at the difference between yesterday's close and today's open.
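
For reference, the open-based version of the gap could be sketched like this in plain Python, with a guard for a missing or zero previous close. `gap_pct`, `is_gap_up`, and the 1% threshold are hypothetical names and values chosen for illustration; they are not taken from the attached algorithm.

```python
def gap_pct(prev_close, today_open):
    """Overnight gap as a fraction of yesterday's close; None if it cannot be computed."""
    if not prev_close:  # covers both 0 and None, so we never divide by zero
        return None
    return (today_open - prev_close) / prev_close


def is_gap_up(prev_close, today_open, min_gap=0.01):
    """True when the open is at least min_gap above yesterday's close."""
    gap = gap_pct(prev_close, today_open)
    return gap is not None and gap >= min_gap


print(is_gap_up(100.0, 102.0))
print(is_gap_up(0.0, 102.0))
```

Returning None rather than 0 for the unusable case keeps "no gap" and "could not compute" distinguishable in the debug output.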

.ekz.

Also, regarding GILD, there is something else going on. It does gap up, but it doesn't break the Bollinger Band, so it shouldn't be flagged. There's something odd going on. I'll try to get to the bottom of it.

On the one hand, it's happening with our minute-resolution approach (without consolidation) because, as kctrader pointed out, the BB bands on 1Min time frame will be much narrower than on the 30Min timeframe.

On the other hand, it is also selecting GILD when we use the consolidation approach, but that is likely due to something not working quite right with our consolidation logic.

I will try to troubleshoot. I suspect a race condition between when consolidation events fire and when our AtMarketOpen routine fires, but I could be wrong. Will explore.

Cole S

Leandro Maia, the algorithm.Consolidate() method returns the consolidator. You can then store that consolidator the same way you did in your previous code.

Cole S

I added more details to the debug:

2020-04-30 10:00:00 GILD R735QTJ8XC9X Thu Apr 30 10:00:00 2020 GapUp:True EMA:82.12711 SMA:82.06204 BB:0.0 Close:82.012990473

Your BB was 0. It did not have enough data yet (needs to be warmed up). I added an IsReady check to exclude those that are not warmed up.
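
The effect of a missing readiness check can be reproduced with a small plain-Python sketch. `RollingStd` and `breaks_upper_band` are hypothetical stand-ins, not the LEAN indicator classes; the only behaviour borrowed from the debug line above is that the indicator reports 0.0 before it has enough data.

```python
from collections import deque
from statistics import pstdev


class RollingStd:
    """Hypothetical rolling standard deviation that reports 0.0 until warmed up."""

    def __init__(self, period):
        self.period = period
        self.window = deque(maxlen=period)

    @property
    def is_ready(self):
        return len(self.window) == self.period

    @property
    def value(self):
        return pstdev(self.window) if self.is_ready else 0.0

    def update(self, x):
        self.window.append(x)


def breaks_upper_band(price, mean, std_indicator, k=2.0):
    # Without this check, a zero width collapses the band onto the mean,
    # so any price above the mean would look like a breakout.
    if not std_indicator.is_ready:
        return False
    return price > mean + k * std_indicator.value


std = RollingStd(3)
std.update(1.0)
print(breaks_upper_band(10.0, 5.0, std))  # indicator not warmed up yet
```

The same idea applies to every indicator used in the selection: skip the symbol until all of them report ready.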

.ekz.
